Article

Machine-learning methods are being actively developed for computed imaging systems like MRI. However, these methods occasionally introduce false, unexplainable structures in images, known as hallucinations, that can lead to incorrect diagnoses.

Researchers at the Beckman Institute for Advanced Science and Technology and the Computational Imaging Science Laboratory have defined a mathematical framework for identifying hallucinations, a first step toward reducing their frequency.

This work, “On hallucinations in tomographic image reconstruction,” was published in IEEE Transactions on Medical Imaging in a special issue on machine-learning methods for image reconstruction.

Most modern medical imaging systems, such as MRI, computed tomography (CT), and PET scanners, do not record images directly. Instead, they form images from raw measurements through a computational procedure known as image reconstruction. Researchers worldwide are seeking to develop improved image reconstruction methods that use deep learning (DL) to circumvent the limitations of traditional methods. DL, like all machine-learning methods, allows algorithms to improve by learning from training datasets. Advanced DL-based methods can reduce scan times and radiation doses. Although DL-based image reconstruction methods hold great potential for such purposes, they sometimes produce images that appear plausible but contain false structures that may confound a medical diagnosis.

“This work is an excellent example of how our research group is making foundational contributions to the rapidly evolving field of imaging science,” said Mark Anastasio, the principal investigator of this study and head of the Department of Bioengineering at the University of Illinois Urbana-Champaign.

“Because our framework for analyzing hallucinations in biomedical images will enable researchers to quantitatively assess their image reconstruction methods in new and meaningful ways, we expect the impact of our study to be large.”

Varun Kelkar (left) and Sayantan Bhadra (middle), who are co-first authors of this work, pictured with principal investigator Mark Anastasio (right). Bhadra is a student at Washington University in St. Louis and has been a visiting scholar in Anastasio's group since 2019.

Lead authors Varun Kelkar and Sayantan Bhadra, both graduate students in Anastasio’s group, anticipate that hallucination maps will help researchers and radiologists identify hallucinations and assess how detrimental they might be. Ultimately, this could lead to a deeper understanding of how DL reconstruction methods should be trained to avoid patient misdiagnosis.

Varun Kelkar (left) and Sayantan Bhadra (middle), who are co-first authors of this work, pictured with principle investigator Mark Anastasio (right). Bhadra is a student at Washington University in St. Louis, and has been a visiting scholar in Anastasio's group since 2019.Lead authors Varun Kelkar and Sayantan Bhadra, both graduate students in Anastasio’s group, anticipate that hallucination maps will help researchers and radiologists identify hallucinations and assess how detrimental they might be. Ultimately, this leads to a deeper understanding of how DL reconstruction methods should be trained to prevent patient misdiagnosis.

“An effective reconstruction method is based on understanding the physics of the imaging system, but also uses realistic assumptions about how an object should appear,” Bhadra said. “DL-based image reconstruction methods are about designing the interplay of these elements by … training deep neural networks on large databases of previously existing clinical images.”

Though DL-based image reconstruction methods are gaining popularity in the field, evidence suggests that they may be unstable: small changes to the collected measurements can cause large differences in the resulting image. These challenges are exacerbated when sampling is incomplete, that is, when fewer raw imaging measurements are collected to reduce scan time and cost.
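To make “unstable” concrete, here is a toy sketch. It uses a naive linear inverse rather than a deep network, so it does not model any DL method from the study. A hypothetical 3×3 system matrix `H` with one very small singular value stands in for a poorly measured direction, and a tiny perturbation of the measurements produces a far larger relative change in the reconstruction.

```python
import numpy as np

# Toy linear imaging model g = H f (hypothetical; not from the study).
# The third direction is barely measured (singular value 1e-4).
H = np.diag([1.0, 0.5, 1e-4])
f = np.array([1.0, 2.0, 3.0])   # "true" object
g = H @ f                        # noiseless measurements


def reconstruct(meas):
    """Naive reconstruction: directly invert H (no regularization)."""
    return np.linalg.solve(H, meas)


delta = np.array([0.0, 0.0, 1e-3])  # tiny measurement perturbation

# Relative size of the change in the measurements vs. in the image
rel_in = np.linalg.norm(delta) / np.linalg.norm(g)
rel_out = np.linalg.norm(reconstruct(g + delta) - reconstruct(g)) / np.linalg.norm(f)

print(f"condition number of H: {np.linalg.cond(H):.0e}")
print(f"relative change in measurements: {rel_in:.2e}")
print(f"relative change in reconstruction: {rel_out:.2e}")
```

Here the perturbation is amplified by a factor on the order of the condition number of `H`; reconstruction methods typically counter this with regularization or prior assumptions about the object.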

“Although DL has been shown to be useful in many contexts, there’s a lot going on inside these methods that are … still viewed as a black box by many researchers,” Bhadra said. “That makes it hard to tell when a medical abnormality might actually be a false structure created by the machine-learning method. The novelty of our work is in mathematically defining those false structures, which we call hallucinations.”

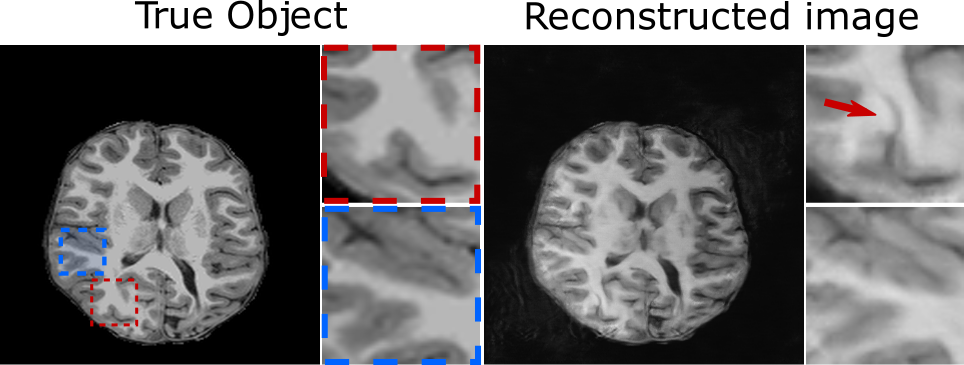

A schematic of hallucinations from DL-based reconstruction of a clinical pediatric MR brain image with training performed on adult brain images. The artifact outlined in red shows a realistic fold-like structure that has been hallucinated by the DL-based method, while the artifacts outlined in blue are from separate sources of scanner error.

Kelkar, Bhadra, and colleagues provide a formal definition of hallucinations based on fundamental principles of imaging science. Through numerical studies, they evaluated the presence and impact of hallucinations in both DL-based and non-data-driven reconstruction methods. They used these methods to reconstruct images of adult and pediatric brain tissue, with the DL-based method trained only on adult brain images, to evaluate the effect of bias from the training images.

“Our goal in mathematically defining hallucinations was to separate sources of systemic error, like measurement errors or noise, from errors that arise due to inaccurate assumptions about the to-be-imaged subject that are built into the image reconstruction method,” Kelkar said.
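The paper’s exact definitions are not reproduced here. As a minimal sketch, assuming a linear imaging model g = Hf with a known system matrix H, one generic way to make this kind of separation concrete is to split the reconstruction error into a measurement-space part and a null-space part. Null-space components leave the measurements unchanged, so the data cannot reveal them; they can only come from what the reconstruction method assumes about the object. The function `split_error` and the random toy matrix are hypothetical illustrations.

```python
import numpy as np


def split_error(H, f_true, f_recon):
    """Split reconstruction error into measurement-space and null-space parts.

    Assumes a linear model g = H f. Error in the null space of H produces no
    change in the measurements, so it must come from assumptions built into
    the reconstruction rather than from the data.
    """
    P_meas = np.linalg.pinv(H) @ H          # projector onto the row space of H
    P_null = np.eye(H.shape[1]) - P_meas    # projector onto the null space of H
    err = f_recon - f_true
    return P_meas @ err, P_null @ err


rng = np.random.default_rng(0)
n, m = 16, 6                                 # fewer measurements than unknowns
H = rng.standard_normal((m, n))              # hypothetical system matrix
f_true = rng.standard_normal(n)

# Hypothetical reconstruction that adds a structure invisible to H:
# rows m: of Vh from the full SVD span the null space of a rank-m H.
null_basis = np.linalg.svd(H)[2][m:]
fake_structure = null_basis.T @ rng.standard_normal(n - m)
f_recon = f_true + fake_structure

e_meas, e_null = split_error(H, f_true, f_recon)
# e_meas should be numerically zero; e_null carries the added structure.
print(np.linalg.norm(e_meas), np.linalg.norm(e_null))
# The fake structure is consistent with the measured data.
print(np.allclose(H @ f_recon, H @ f_true))
```

In this toy case the added structure fits the measurements perfectly, which is why a check against the data alone cannot flag it.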

The impact of a generated hallucination is highly dependent on the type of tissue being imaged and the disease being monitored. Sophisticated image processing methods can be applied to identify how a particular diagnostic task may be affected by the presence of hallucinations.

“A hallucination could be something minor, such as a slight imperfection at the boundary of a tissue, or it could be something major, like introducing an entire fold of tissue in the brain,” Kelkar said.

Editor’s note:

The publication associated with this work can be found at https://doi.org/10.1109/TMI.2021.3077857

Frank Brooks, an assistant professor in the Department of Bioengineering at UIUC, also contributed to this work.

Contact Mark Anastasio at maa@illinois.edu

Contact the Beckman Communications Office at communications@beckman.illinois.edu

Beckman Institute for Advanced Science and Technology